Elliptic Curve ZK-Proof Acceleration on AMD Versal

We present a novel system demo accelerating elliptic curve cryptography on AMD's Versal heterogeneous compute platform.

1/23/24

Irreducible Team

One major category of zero-knowledge (ZK) proof systems, which includes the popular Groth16 and PLONK constructions, relies heavily on elliptic curve cryptography. The performance bottleneck in generating these types of proofs is a computation called multi-scalar multiplication (MSM). In the case of Groth16, for example, the MSM steps account for 80+% of the overall proving time.

Our industry has mounted a massive effort to improve the MSM performance using a variety of hardware accelerators. Notably, the ZPrize competition fueled research into optimized GPU and FPGA solutions, yielding a top, combined GPU submission that performed in only 2.28s a computation that takes 228s on a powerful 112-core CPU. At Irreducible, we investigated whether a new, promising high-performance hardware acceleration platform made by AMD, called Versal, could beat the state-of-the-art in GPU and FPGA performance.

The most efficient algorithm for MSM is the bucket method, also known as Pippenger’s algorithm. Given sufficient memory, this algorithm is bottlenecked by performing a large volume of parallel elliptic curve point additions. To evaluate the Versal architecture’s capability in accelerating MSM computation, we developed an end-to-end system demo that stress tests the throughput of elliptic curve point additions on inputs streamed from a CPU host. In this post, we provide background information on Versal, the programming model it enables, describe the design of our elliptic curve cryptography engine and the system demo, summarize the performance results we attained, and finally analyze the Versal platform as an accelerator for ZK computation.

AMD Versal and Heterogeneous Compute

Heterogeneous compute platforms combine multiple types of processing units into a single System-on-Chip device (SoC). Our demo targets the VCK5000 development platform from AMD’s new Versal line of adaptive SoCs. The VCK5000 offers three main processing types: traditional CPU processing (ARM cores), an FPGA fabric for highly parallel or customized processing, and a systolic array (DPU) architecture that AMD calls the “AI Engine.” These elements live on a shared silicon die and are interconnected with an ultra-high-bandwidth, programmable Network-On-Chip (NOC). This architecture unleashes a new dimension of hardware-software co-design compared to traditional accelerators, which communicate with the CPU via the motherboard using the PCIe protocol.

Versal ACAP platform and AI Engine array architecture (source: AMD).

Versal cards like the VCK5000 have a few notable advantages over GPUs when it comes to practical deployment. First and foremost, datacenter-ready GPUs are generally more power-hungry. For example, the Nvidia A100 consumes 300 W - 400 W, whereas the VCK5000 consumes 150 W - 200 W. Assuming a server chassis capable of hosting dual-socket systems with eight accelerator cards, we get a total power of 2.4 kW - 3.2 kW for the GPUs compared to 1.2 kW - 1.6 kW for Versal cards. Since both cards have passive cooling, GPU servers require much stronger airflow and higher-end power supply units capable of delivering high power at elevated temperatures. Beyond the power considerations, the Versal cards feature versatile networking capabilities that enable high-throughput communication between the cards, something often required for complex computations. Finally, at the time of writing this article, Nvidia’s A100 is roughly two times more expensive than AMD’s VCK5000 accelerator card. While we mainly focus on the raw compute performance evaluation, we find that the practical deployment, power consumption and pricing all favor the Versal chips.

Our demo primarily focuses on the AI Engine, with support from the FPGA fabric. The AI Engine is a systolic array architecture consisting of a rectangular grid of data processing units called AI Engine Tiles. Each AI Engine Tile has its own instruction memory and data memory, and adjacent tiles directly share data memory.

AI Engine tile architecture (source: AMD).

Our demo implements a pipelined elliptic curve multi-scalar multiplication (MSM) on the AI Engine systolic array. This design paradigm of pipelining is markedly different from the Single Instruction, Multiple Threads (SIMT) approach commonly employed in GPU MSM implementations. Unlike GPUs, where multiple threads execute the same operation in lockstep, each AI Engine Tile in our system has its own instruction memory and decoder, allowing for more versatile and independent processing. This architecture is analogous to an assembly line in a factory: data flows through the AI Engine Tiles, much like a car body moving through different stages of assembly. In our case, we represent the target elliptic curve operation as a data flow graph, where each tile performs a specific finite field operation required for the elliptic curve calculation, transforming the data before passing it to the next tile. The sequence of stages, or layers of the data flow graph, form a pipeline.

AI Engine Programming Model

The AI Engine offers a unique programming model since it blends high-level algorithmic concepts with low-level optimization. The programming is split into two phases: kernel programming and graph programming. AMD supports this programming model with a custom, proprietary toolchain for compiling C++ to run on the Versal platform.

Developers program the AI Engine kernels in C++ using intrinsics and custom data types provided by AMD. This phase focuses on adapting an algorithm of interest to the AI Engine’s unique ISA. The AI Engine ISA is specifically designed for AI inference and is missing many instructions common on CPUs and GPUs.

The main operation implemented for AI Engines is multiply-accumulate (MAC), a fundamental operation for linear algebra and AI inference. This instruction accepts three inputs. Two 128-bit vector multiplicand registers, each consisting of eight 32-bit signed integers. The product is computed and added to a 640-bit accumulator register consisting of eight 80-bit signed integers.

Kernel programming offers two layers of parallelism: SIMD instructions and very-long-instruction-word (VLIW) instruction-level parallelism. The former is well-known and involves instructions that operate on large registers, computing multiple results in parallel. The latter, VLIW processing, is common on GPU and TPU architectures. VLIW processing allows multiple instructions to begin execution concurrently. For example, an AI Engine Tile can perform a memory load, a MAC instruction, and a memory store all in the same cycle. Programming efficiently for VLIW architectures requires in-depth knowledge of the ISA, careful avoidance of data hazards, and an excellent compiler.

The same operation is convenient when multiplying multi-limb big integers, which is an essential step in elliptic curve point additions. In the schoolbook multiplication algorithm, MAC operations reduce register pressure since the result can stay in the accumulator register rather than spill to memory.

In contrast to kernel programming, AI Engine graph programming is more high-level. It focuses on linking AI Engine kernels into a final combined graph and constraining the placement and parameters of AI Engine kernels onto concrete AI Engine Tiles.

At the graph programming level, there are two layers of parallelism. First, kernels in an AI Engine graph form a pipelined network known as a Kahn process network. In other words, each AI Engine Tile works independently and can begin processing a new input as soon as it has passed an output to a neighboring tile. We use the pipeline formed by this process to structure our elliptic curve implementation. The second layer of parallelism is that multiple pipelines can be deployed on the same device. For example, we estimate we could deploy 23 of our AI Engine ECC pipelines (described in the next section) on the VCK5000 AI Engine.

The final stage of graph programming involves connecting AI Engine Tiles to the FPGA fabric. This stage involves a reprogrammable network-on-chip (NoC) and allows for sophisticated multiplexing. The programmable NoC can address packets to/from individual AI Tiles, which is convenient for multiplexing and demultiplexing the elliptic curve operations in the FPGA fabric.

Elliptic Curve AI Engine Pipeline

We implemented elliptic curve point addition over the BN254 G1 elliptic curve group, which has become a standard in ZK proof systems, as an AI Engine pipeline.

The elliptic curve point computations involve modular addition, subtraction, and multiplication with respect to the 254-bit base field modulus. Typical CPU and GPU software implements these field operations by representing each of the 254-bit finite field elements as an array of 32- or 64-bit unsigned integer limbs, depending on the architecture. There is a well-known method for computing modular multiplications called Montgomery multiplication, and a variant of the method exists that operates directly on this representation of big integers using unsigned integer limbs. This algorithm cannot be adapted well to the AI Engine on the VCK5000, however, because the device does not support vectorized multiplication of unsigned 32-bit integers, only of signed 32-bit integers. We worked around this hardware limitation by developing a novel variant of Montgomery multiplication that natively handles big integers represented with signed limbs.

Considering several point representations are possible for the target elliptic curve, we chose the XYZZ coordinate representation, as it requires the fewest number of expensive finite field multiplications to compute a mixed addition. Our elliptic curve point addition architecture is designed around a simple Kanh process network (KPN) modeling the elliptic curve point mixed addition algorithm. As documented on hyperelliptic.org, elliptic curve mixed addition is computed in the following way:

U2 = X2*ZZ1

S2 = Y2*ZZZ1

P = U2-X1

R = S2-Y1

PP = P^2

PPP = P*PP

Q = X1*PP

X3 = R^2-PPP-2*Q

Y3 = R*(Q-X3)-Y1*PPP

ZZ3 = ZZ1*PP

ZZZ3 = ZZZ1*PPP

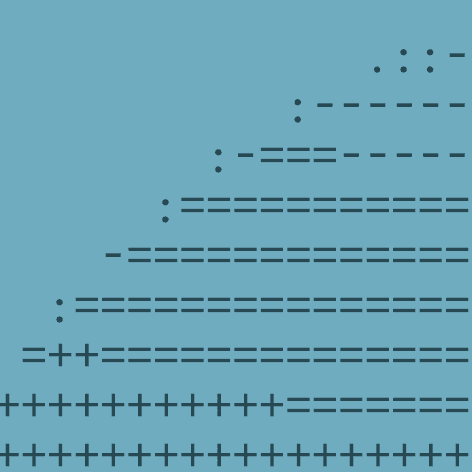

This algorithm can be split into 14 dyadic operations and 3 unary operations, where the operands and results are finite field elements in signed Montgomery form. A Kahn process network naturally models this computation. Below, we depict the Kahn process network for this computation. We note, however, that there are alternative ways to create a KPN for this computation. For example, finite field reduction can be considered a fundamental unary operation, but we decided to use full finite field operations for simplicity.

Anatomy of the elliptic curve point addition computation. The operations in maroon are on the critical path.

The resulting KPN has six source nodes, X1, Y1, ZZ1, ZZZ1, X2, and Y2. The first four correspond to the coordinates of the extended Jacobian point, and the latter two correspond to the affine addend. The KPN has four sink nodes, X3, Y3, ZZ3, and ZZZ3, corresponding to the coordinates of the resultant extended Jacobian point. The source and sink nodes receive data from the FPGA fabric over AXI stream ports.

The overall throughput of an AI Engine pipeline is determined by the cycle latency of the slowest stage. We carefully optimized the AI Engine kernels by vectorizing critical operations, analyzing the resultant VLIW assembly, and inspecting memory access patterns. The pipeline architecture is built on the observation that the AI Engine architecture has four 8-lane accumulator registers and can perform loads, stores, and computations in parallel due to the VLIW nature of the architecture. Thus, the AI Engine operates most efficiently on bundles of 32 finite field elements.

Accelerating the computation involves optimizing the C++ code into a form the compiler backend instruction scheduler can easily parallelize. With this in mind, it’s easy to see how a computation that loads 4x8 lane 32-bit words, applies 4x8 lane multiply-accumulate operations, and then stores the results is an easy program for the compiler instruction scheduler to optimize. In particular, the four instances of multiply-accumulate operations are independent, so the compiler can interleave these computations without worrying about data hazards.

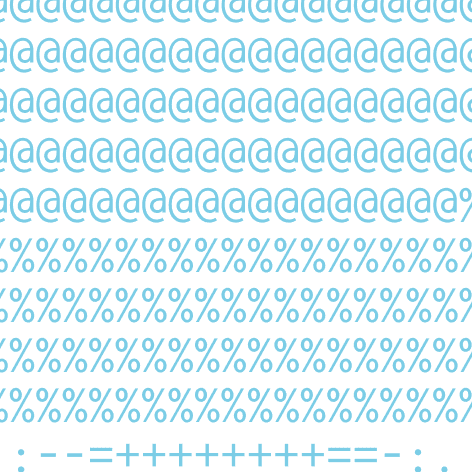

To adapt this approach to multi-limb big integers, we need to change the memory representation of the finite big integer to be more pleasant for the AI Engine ISA to work with. Traditionally, the memory representation for a finite field element would be 8 consecutive 32-bit words each representing a limb of the big integer. A list of 32 big integers in memory would then be 32 consecutive elements of this form. This approach is inefficient on vectorized processors.

Instead, we reshape the list of 32 big integers to consist of a 128 byte block of the 32 lowest limbs of each integer, then a block of the 32 second-lowest limbs, and so on. It is easiest to think of these 128 byte blocks as 4x8 grids of 32-bit limbs. The full 32 integer bundle can be thought of as a 4x8x8 cuboid by stacking these grids.

Memory layout of pipeline inputs. Traditional (left) vs cuboid (right).

This representation is convenient for implementing big integer multiplication. The 32x512 bit output of a 32-way 256-bit multiplier is a 4x8x16 cuboid constructed by multiplying slices of the two 4x8x8 operand cuboids. Furthermore, this approach can be adapted to implement 32-way vectorized Montgomery multiplication, 32-way vectorized finite field addition, and 32-way vectorized finite field subtraction. In practice, our kernels perform 4 instances of these 32-way operations in sequence to avoid overhead with double buffer locking and kernel initialization penalties.

System demo

We deployed our system demo on a VCK5000 card connected to a host system with an Intel i7-13700K CPU. The VCK5000 platform was the best choice available when we began this work over a year ago. AMD has since released a new line of devices that offer next-generation AIE-ML processors, such as the V70 described in a blog post by Ingonyama.

Our demo uses multiple AI Engine ECC pipelines in the AI Engine with simple passthrough blocks in the FPGA fabric. These passthrough blocks accept host DMA writes and push the written data into AXI stream interfaces. These AXI stream interfaces then feed data into the AI Engine. Similar passthrough blocks receive output from the AI Engine and forward it to the host.

To maintain simplicity in our demo, we chose to handle multiplexing across the AI Engine ECC pipelines via software, as opposed to using the AI Engine's built-in "packet split and packet merge" feature. This approach restricted us to 10 AI Engine ECC pipelines, instead of the maximum possible 23. However, this limitation can be resolved by employing the AI Engine's programmable NoC for multiplexing. We decided this limitation is inconsequential for a proof-of-concept given that the main limitation is the AI Engine's ISA, which we discuss in a later section.

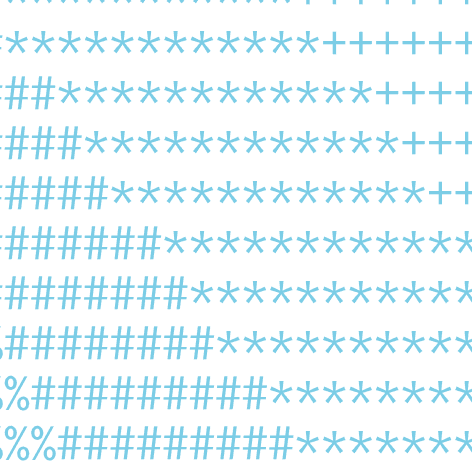

Since we only use 10 AI Engine ECC pipelines, many AI Engine Tiles are under-utilized for our demo. The demo uses roughly only 50% of the AI Engine’s compute power. The figure below is a rendering of the placement of logic on the AIE grid. The black region in the diagram shows the under-utilized tiles.

AI Engine kernel and memory placement for the demo.

Even with our highly optimized AI Engine graph, we found that the computation is heavily compute-bound. In other words, the limiting factor in performance is indeed the AI Engine point addition rather than the bandwidth from the FPGA fabric feeding inputs to the AIE array. If each pipeline required 8x fewer cycles and the AI Engine grid could fit 340 pipelines, then the AI Engine would be able to match the maximum theoretical throughput of the FPGA fabric.

Performance results

We measured the performance of our AI Engine ECC pipelines under two scenarios. First, we measured the performance without PCIe transfers. In this scenario, we achieved near perfect linear scaling. In other words, a 10 pipeline implementation was exactly 10x faster than a 1 pipeline implementation. One pipeline took 30.778 s to compute 2²⁶ point additions and ten pipelines took 2.982 s to compute 2²⁶ point additions. The graph below clearly shows that the throughput of our design scales linearly with the number of pipelines.

Performance for implementation without PCIe transfers.

We also measured the performance of one AI Engine ECC pipeline sending points from an x86-64 host over PCIe. This implementation is not indicative of practical performance since a production implementation will store coordinates on-card in DRAM and will fetch data with the FPGA fabric instead using PCIe. The graph below shows that the PCIe data fetching design becomes suboptimal when using 7 pipelines. The variability in throughput is caused by the PCIe transfers becoming a bottleneck when 7 AI Engine ECC pipelines are used. This issue will not apply to a production FPGA-fabric data-fetcher implementation.

Performance for implementation with PCIe transfers.

Our benchmarks reveal that one pipeline has a throughput of 2.2M mixed additions per second, and 10 pipelines have a combined throughput of 22.5M mixed additions per second. As explained in the “System Demo” section above, we estimate that 23 AI Engine ECC pipelines could fit within one VCK5000 device using the AI Engine’s “packet split and packet merge” feature. Assuming the perfect linear scaling trend continues, an implementation with 23 pipelines would yield 51.7M mixed additions per second. In comparison, Consensys’s gnark-crypto high-performance software implementation achieves 300M operations per second on a 112-core c2d-standard-112 instance on Google Cloud, and Matter Labs’s era-bellman-cuda GPU library performs 2.4B operations per second on an Nvidia A100.

Given that the FPGA fabric can feed 20 mixed addition inputs per 300MHz cycle, a theoretically optimal AI Engine implementation could perform 6B mixed additions per second. The disparity in performance between our implementation and the theoretical maximum is primarily due to the inflexibility of the AI Engine’s ISA. Certain operations that take one instruction on other architectures require 10-15 instructions to execute on the AI Engine. Our assessment is that several adaptations to the AI Engine’s ISA are needed to achieve this level of performance.

Challenges & Conclusions

First and foremost, we note that the AI Engine ISA is specifically designed and optimized for AI inference and not for general-purpose computations like GPUs are. The ISA poses significant challenges when implementing elliptic curve cryptography. In particular, VCK5000 does not support unsigned 32-bit addition, vectorized addition does not compute carries, and crucial bitwise operations are not natively supported. An adaptation of the ISA that better supports big integer arithmetic could significantly improve the throughput of this design.

The second challenge we faced is that the proprietary Versal toolchain is under-documented and buggy. We spent considerable time debugging compiler segfaults, reverse-engineering proprietary compiler internals, and solving toolchain-specific compiler bugs. We discovered that the Versal toolchain uses a two-stage compiler for AI Engine development. The first stage compiler converts the AI Engine graph and kernel code into multiple smaller C++ build directories, one to compile binaries for each tile. The AI Engine C++ code is transpiled into standard C++ during this process.This process was imperfect and under-documented. Many C++ features cause issues during the transpilation process. For example, AI Engine kernels can not easily be templatized and AI Engine graphs can not contain dynamically sized data structures (std::vector<kernel> is not allowed). These example bugs are the least complicated. We faced many more complicated bugs of this flavor.

Given the significant gap in computational efficiency between our experiment with the VCK5000 and the Z-Prize submissions, we conclude that NVidia GPU solutions will continue to outperform the AI Engine implementations on Versal for elliptic curve MSM accelerators in the near future. We found that the hardware design and ISA of the AI Engine processor are the main limitations in using the Versal AI Engine to accelerate elliptic curve cryptography. However, despite the development challenges with the Versal platform, our experience leaves us convinced of the massive potential for future heterogeneous compute platforms.